Want to build your own AI voice assistant from scratch? The good news is, it's totally possible today. Artificial intelligence has grown so fast that things once seen as science fiction are now part of everyday life.

Big names like Amazon Alexa, Google Assistant, and Apple Siri set the bar. But with today's large language models, voice generators, and easy-to-use APIs, startups and small teams can create voice agents that fit their needs. The voice assistant market is expected to reach $15.8 billion by 2030, so this is a great time to jump in and create one yourself.

We've studied the technology from every angle and put it into practice. So now, we want to pass on what we learned. In this guide, you’ll learn:

- A step-by-step process to create an AI voice bot

- The essential features every modern AI personal assistant should have

- Expert tips for adding AI voice cloning, handling audio input, and connecting smart home gadgets without the hassle.

Here are some things you need to know before we start.

Who this guide is for:

- SMBs building customer-facing products

- Product teams experimenting with AI technology

- Support and contact-center teams exploring an AI voice bot

- Internal teams creating a custom voice assistant

What types of products can you build with this guide?

- Voice MVP: A basic version with FAQs and a few actions. Usually takes about 6–10 weeks to build.

- Enterprise pilot: A more advanced setup that connects to IVR, CRM, or ERP systems. Commonly takes 12–16 weeks.

- Multilingual support agent: Usually ready in about the same timeframe as an enterprise pilot, depending on how big the project is.

- Custom AI personal assistant: Designed for internal workflows or special tasks.

Where this approach isn’t a fit:

- Low request frequency: If people rarely use the assistant, the time and cost of setting it up may not pay off.

- No access to data: If the system can't access the data it needs, the voice assistant won't work well.

- Strict offline or air-gapped environments: Without internet access, or on isolated networks, the assistant can't reach cloud-hosted models and APIs, so it can't handle requests in real time.

We’re Oleh Komenchuk – a Machine Learning Engineer at Uptech, and Andrii Bas – Uptech co-founder and AI expert. Let's get to it!

What are AI Voice Assistants and their types?

AI voice assistants are software that uses generative AI, machine learning, and natural language processing to interpret verbal commands and act on them. From setting an alarm to ordering deliveries, AI assistants can vary in technologies, purpose, and complexity.

Let’s explore the common ones.

Chatbots

They are apps that let you converse with AI through a chat interface but might also incorporate speech recognition technologies. For example, ChatGPT started as a generative AI chatbot but recently allowed users to interact verbally. Instead of typing their questions, users can speak directly to the chatbot and get a verbal response.

Voice assistants

Voice assistants are apps primarily designed to listen to users' commands, interpret them, and perform specific tasks. Alexa, Siri, and Google Assistant are examples of popular voice assistants that people use in daily life.

Specialized virtual assistants

Some AI voice assistants are designed for specific industries, which we call specialized virtual assistants. For example, a medical chatbot that converses with patients and schedules their appointments is a specialized virtual assistant.

AI Avatars

AI avatars put a face to the voice you hear from an AI chatbot. They are the animated form of characters or people that users can see when interacting through voice commands.

How to Develop an AI Voice Assistant Step by Step

Even though it looks simple to the user, an AI voice assistant isn’t easy to build. Beneath the simple interface lie layers of complexity that require highly specialized skill sets. Moreover, to create your own voice assistant, you need to consider security, compliance, scalability, adaptability, and more.

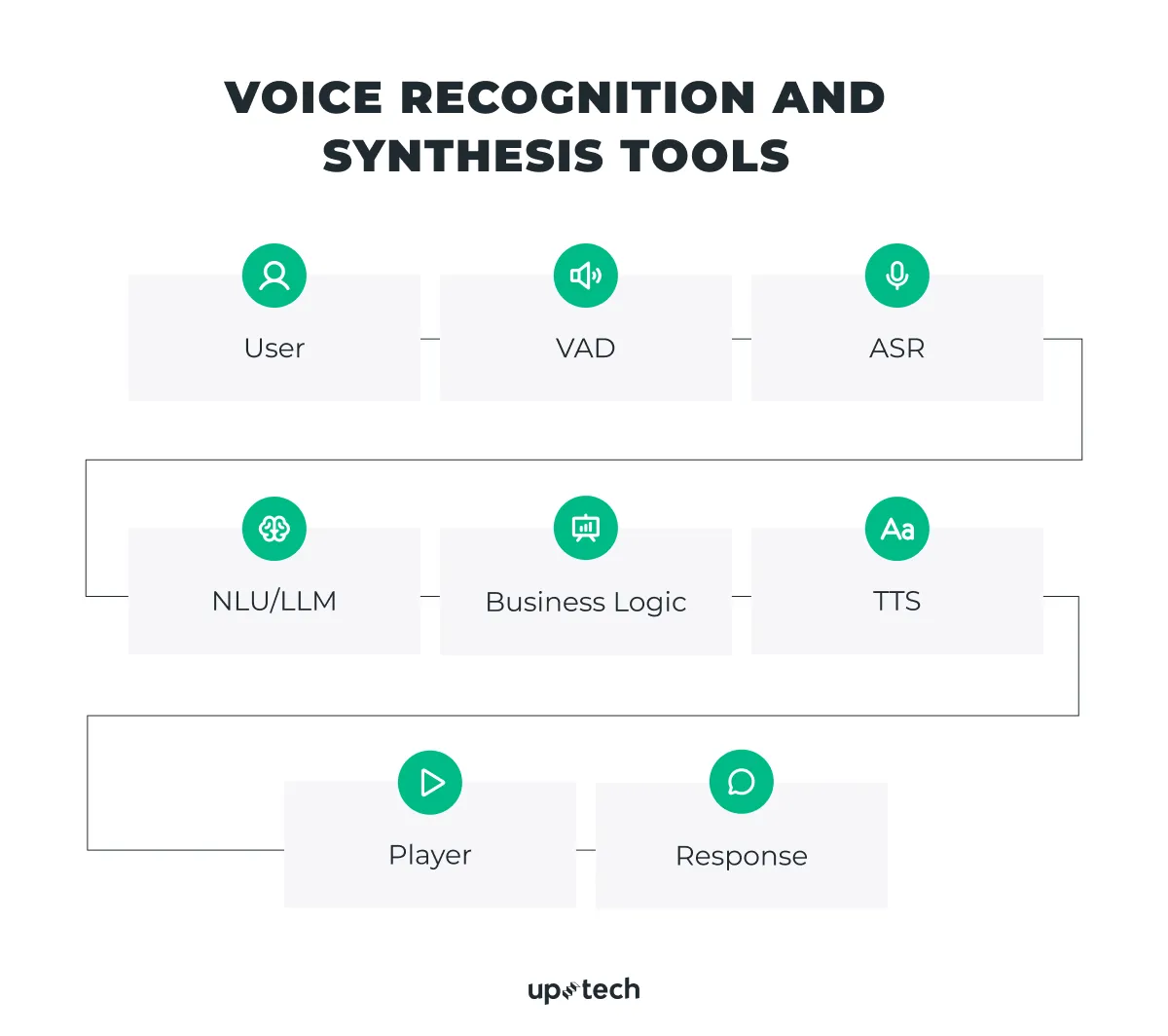

The diagram below shows our process for building a virtual personal assistant.

Step 1: Define your AI Assistant’s purpose

First, establish how the AI assistant adds value to the user experience. It’s important to determine whether building or integrating a voice assistant clearly helps users fulfill their goals. Otherwise, you might pay a hefty fee for an AI feature that serves very little purpose.

If you’re not sure whether users will find an AI assistant helpful, conduct a survey and interview them. Present your ideas, or better still, visualize how a voice assistant could help improve their app experience. When interviewing your target users, ask probing questions, such as:

- Can an AI voice assistant simplify the user’s interaction?

- Will it be helpful in solving their problems?

- How can the voice assistant integrate with existing capabilities?

Collect feedback, validate your ideas, and revise them. From there, you can determine features that you want to include in the AI voice assistant. We’ll explore this in depth later on as it’s an important topic.

Step 2: Choose the right technology stack

Next, determine the tech stack your AI voice assistant requires. Usually, this includes exploring a list of artificial intelligence and software technologies necessary to provide natural language understanding capabilities in your app.

Natural language processing libraries

Natural language processing (NLP) is one of the crucial pillars of AI voice assistants. When building such apps, you’ll need NLP libraries, which provide ready-made frameworks that make it easier for apps to interpret text. For example, Hugging Face Transformers, spaCy, and NLTK are comprehensive NLP libraries that AI developers can leverage.

Machine learning libraries

Besides comprehending user commands, voice assistants need ways to process them intelligently. That’s where machine learning libraries like TensorFlow and PyTorch prove useful: instead of building everything from scratch, AI developers can use pre-built AI functions and integrate them with the app’s logic.

Voice recognition and synthesis tools

Voice recognition and synthesis frameworks are another critical part of the tech stack; an AI voice assistant can’t function without them. Voice recognition technologies capture voice commands and turn them into text that the machine learning and NLP modules can process.

Meanwhile, speech synthesis tools turn the output into natural, audible voice responses for users. Frameworks that might help with your development effort include CMU Sphinx for speech recognition and Google Text-to-Speech for synthesis.

To take things a step further, here’s a high-level look at assembling a modern AI voice agent:

- VAD (Voice Activity Detection): Detects when the user starts and stops talking.

- Streaming ASR (Automatic Speech Recognition): Turns audio input into text as the user speaks.

- NLU / LLM + function calling: Understands what you mean and calls the right tools or APIs if needed.

- Business logic: Applies rules, workflows, or integrates with other systems.

- Streaming TTS (Text-to-Speech): Converts the response of the AI personal assistant into speech.

- Player: Plays the audio back to you.
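To make these stages more concrete, here’s a minimal Python sketch of one conversational turn. It’s deliberately non-streaming: each stage is a plain function you inject, and the class and parameter names (`VoicePipeline`, `is_speech`, `transcribe`, and so on) are hypothetical, not part of any vendor SDK. A production agent would stream partial results between stages and run them concurrently.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional


@dataclass
class VoicePipeline:
    """One turn through the VAD -> ASR -> NLU/LLM -> logic -> TTS -> player chain.

    Every stage is an injected function, so real engines (a VAD model, an ASR
    client, an LLM with function calling, a TTS service) can be plugged in.
    The stage names are hypothetical placeholders, not a vendor API.
    """

    is_speech: Callable[[bytes], bool]         # VAD: does this audio frame contain speech?
    transcribe: Callable[[List[bytes]], str]   # ASR: speech frames -> text
    understand: Callable[[str], dict]          # NLU/LLM: text -> {"intent": ..., "args": ...}
    act: Callable[[dict], str]                 # business logic: parsed request -> reply text
    synthesize: Callable[[str], bytes]         # TTS: reply text -> audio
    play: Callable[[bytes], None]              # player: send audio to the speaker or call

    def handle_turn(self, frames: Iterable[bytes]) -> Optional[str]:
        # VAD: keep only the frames that contain speech.
        speech = [frame for frame in frames if self.is_speech(frame)]
        if not speech:
            return None  # nothing was said, so nothing to answer
        text = self.transcribe(speech)     # ASR
        request = self.understand(text)    # NLU / LLM
        reply = self.act(request)          # business logic
        self.play(self.synthesize(reply))  # TTS + playback
        return reply
```

Because every stage is injected, you can test the orchestration with stub functions first and swap in real services later.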

To create a good AI voice assistant, you need to include key features that help it interact naturally, such as the following:

- Barge-in (Lets the user interrupt and give a new command without waiting)

- Wake-word detection

- Silence and error handling

- Intent ranking

- Fallback to a human agent
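Intent ranking and human fallback can start as a simple confidence policy over the scores your NLU model or LLM returns. The sketch below assumes you already have per-intent scores between 0 and 1; the function name and both thresholds are hypothetical starting points, not recommended values.

```python
from typing import Dict, Tuple

MIN_CONFIDENCE = 0.6  # hypothetical: below this, don't trust the top intent
MIN_MARGIN = 0.15     # hypothetical: top two this close together are ambiguous


def route(scores: Dict[str, float]) -> Tuple[str, str]:
    """Rank intent scores and pick an action.

    Returns (action, intent), where action is "execute", "clarify", or "handoff".
    """
    if not scores:
        return ("handoff", "")  # nothing recognized: go straight to a human agent
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    top_intent, top_score = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0.0
    if top_score < MIN_CONFIDENCE:
        return ("handoff", top_intent)  # fall back to a human agent
    if top_score - runner_up < MIN_MARGIN:
        return ("clarify", top_intent)  # ask the user which one they meant
    return ("execute", top_intent)
```

For example, `route({"book_appointment": 0.9, "cancel_appointment": 0.05})` returns `("execute", "book_appointment")`, while two near-equal scores trigger a clarifying question instead of a guess.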

Moreover, a helpful assistant should connect with other systems and do tasks reliably, like:

- Calendars, ticketing systems, and payments

- Connected via webhooks or function calls

- Idempotency to make sure commands don’t run twice
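Idempotency usually means attaching a key to each side-effecting request and refusing to execute the same key twice. Here’s a minimal in-memory sketch; the class name and the key recipe (session + turn + action + arguments) are our assumptions, and in production you’d typically keep keys in a shared database or cache with an expiry.

```python
import hashlib
import json
from typing import Any, Callable, Dict


class IdempotentExecutor:
    """Runs each side-effecting command at most once per idempotency key.

    Results live in memory here; a production system would keep keys in a
    shared store (e.g. a database table with a unique constraint) and expire
    them. Not thread-safe as written.
    """

    def __init__(self) -> None:
        self._results: Dict[str, Any] = {}

    @staticmethod
    def make_key(session_id: str, turn_id: str, action: str, args: Dict[str, Any]) -> str:
        # Same session + same turn + same action/arguments => same key, so a
        # retried webhook or repeated function call maps to one execution.
        payload = json.dumps(
            {"session": session_id, "turn": turn_id, "action": action, "args": args},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def run(self, key: str, command: Callable[[], Any]) -> Any:
        if key in self._results:
            return self._results[key]  # duplicate: return the stored result, don't re-run
        result = command()             # if this raises, nothing is stored and a retry may run
        self._results[key] = result
        return result
```

Including the turn ID in the key means a user who deliberately repeats a request in a new turn still gets it executed, while a retried delivery of the same turn does not.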

The assistant works better if it can use the right knowledge to answer questions. This depends on features such as:

- Retrieval-Augmented Generation (RAG) helps the personal assistant to give more accurate answers.

- Cited answers where possible

- Places to store information (databases, vector stores)
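The retrieval half of RAG boils down to ranking your documents by similarity to the question and passing the best matches, with their IDs, to the language model so it can cite them. This sketch uses a toy bag-of-words similarity in place of a real embedding model and vector store; the function names and prompt wording are illustrative assumptions.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def _vector(text: str) -> Counter:
    # Toy bag-of-words "embedding"; replace with a real embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing words
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: Dict[str, str], k: int = 2) -> List[Tuple[str, float]]:
    """Return up to k (doc_id, score) pairs, best first, skipping zero matches."""
    q = _vector(query)
    scored = [(doc_id, _cosine(q, _vector(text))) for doc_id, text in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [pair for pair in scored[:k] if pair[1] > 0]


def build_prompt(query: str, docs: Dict[str, str], k: int = 2) -> str:
    # Tag each retrieved passage with its ID so the model can cite it.
    hits = retrieve(query, docs, k)
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id, _ in hits)
    return (
        "Answer using only the sources below and cite them by ID.\n"
        f"{context}\n\nQuestion: {query}"
    )
```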

Finally, you need to decide whether the assistant runs on the user’s device or in the cloud, as this affects speed, privacy, and cost:

- On-device: Faster and more private, but needs more expensive hardware and has limited computing power.

- Cloud: Easier to scale and cheaper per device up front, but adds network latency, costs more over time, and is less private.

All of these elements combined make your AI voice bot feel natural, quick to respond, and dependable.

Programming languages

While AI frameworks are crucial, your developers need to bring them together. To do that, they write code that integrates the app, backend services, and machine learning algorithms. Here are the most popular programming languages that developers use for AI development:

- Python is arguably the most popular language among AI developers. It’s easy to learn, has a strong community, and gives developers access to an extensive ecosystem of libraries and frameworks.

- Java, on the other hand, is known for its scalability and flexibility. You can use Java to build cross-platform apps or add AI features to an existing software infrastructure.

- C++ is helpful if you want to build an AI virtual assistant that requires more control of the device's native capabilities and performance. Developers use C++ for low-level programming, especially in robotics, games, and computer vision applications.

We know that determining which programming language to use for a specific AI app is not easy. Therefore, we share how they compare to each other in the table below.

Step 3: Collect and prepare data

Before you build an AI voice assistant, you need to train the underlying AI model. To do that, developers must prepare the appropriate training data. For the model to develop voice processing capabilities, you need two types of data: audio and text.

- Audio data allows the virtual assistant to process and interpret different languages, speaking styles, and accents.

- Textual data lets you train the language model to understand the contextual relationship and meaning of various commands.

It’s important to recognize that the quality of training data directly influences the voice assistant’s performance. Knowing what type of data to collect is crucial. Usually, when creating a voice assistant, we collect voice recordings from various speakers.

We want to create a fairly distributed training data sample that realistically represents how people speak in real life. So, some of the recordings might contain background noise, which the AI model must learn to filter out.

After collecting the speech samples, you’ll need to transcribe them into text and label them. That’s because we train AI voice assistants through supervised learning. During training, the machine learning algorithm attempts to match the voice to the task it should perform through the annotations.

Let’s say you want the AI voice assistant to open the Gmail app when hearing “Open Gmail”. You’ll need to specify the context in the labels so the AI algorithm can associate the command with the output.
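One way to store such labels is a JSON Lines file with one record per recording. The field names below (`intent`, `slots`, `speaker_id`, `noisy`) are an illustrative schema we’re assuming for this example, not a required format; adapt them to whatever your training pipeline expects.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, Iterable


@dataclass
class LabeledUtterance:
    audio_path: str                  # the recording
    transcript: str                  # exactly what was said
    intent: str                      # the task the assistant should perform
    slots: Dict[str, str] = field(default_factory=dict)  # task parameters
    speaker_id: str = ""             # lets you check speaker/accent diversity
    noisy: bool = False              # recorded with background noise?

    def validate(self) -> None:
        if not self.audio_path or not self.transcript.strip():
            raise ValueError("audio_path and transcript are required")
        if not self.intent:
            raise ValueError("every sample needs an intent label")


def to_jsonl(samples: Iterable[LabeledUtterance]) -> str:
    """Validate samples and serialize them as JSON Lines (one object per line)."""
    lines = []
    for sample in samples:
        sample.validate()
        lines.append(json.dumps(asdict(sample), ensure_ascii=False))
    return "\n".join(lines)


# The "Open Gmail" example, labeled so the command maps to an action.
example = LabeledUtterance(
    audio_path="clips/0001.wav",
    transcript="Open Gmail",
    intent="open_app",
    slots={"app": "gmail"},
    speaker_id="spk_017",
    noisy=True,
)
print(to_jsonl([example]))
```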

Step 4: Preprocess and clean data

We want to stress again that if you train your AI assistant with low-quality data, you’ll get subpar performance. That’s why it’s crucial to put the data you collected through two stages: preprocessing and cleaning.

Data preprocessing

Humans speak differently than the way they write. Often, verbal speech isn’t optimized for machine learning algorithms. Our day-to-day conversations may contain filler words, repetition, silence gaps, and other irrelevant artifacts that might hamper model learning.

Therefore, data scientists preprocess the data by removing unnecessary audio parts, such as long silences. They also tokenize the transcripts and may remove stop-words so the training data contains only what the model needs.
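Removing unnecessary audio often starts with trimming leading and trailing silence. A minimal sketch, assuming mono audio as floats in [-1.0, 1.0]: split the clip into short frames, compute each frame’s RMS energy, and keep the span between the first and last frame above a threshold. The 20 ms frame size and 0.01 threshold are hypothetical defaults to tune on your own recordings.

```python
import math
from typing import List, Sequence


def frame_rms(samples: Sequence[float], frame_len: int) -> List[float]:
    """RMS energy of consecutive non-overlapping frames (last partial frame included)."""
    energies = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(x * x for x in frame) / len(frame)))
    return energies


def trim_silence(samples: Sequence[float], sample_rate: int = 16000,
                 frame_ms: int = 20, threshold: float = 0.01) -> List[float]:
    """Drop leading and trailing frames whose RMS energy is below threshold."""
    frame_len = max(1, sample_rate * frame_ms // 1000)
    energies = frame_rms(samples, frame_len)
    voiced = [i for i, e in enumerate(energies) if e >= threshold]
    if not voiced:
        return []  # the whole clip is silence
    start = voiced[0] * frame_len
    end = min(len(samples), (voiced[-1] + 1) * frame_len)
    return list(samples[start:end])
```

This only trims the edges of a clip; shortening long pauses inside an utterance would need an extra pass over the interior frames.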

Data cleaning

Excessive noise or background chatter can degrade model training. Therefore, developers might need to filter out background noise or other unwanted audio elements before training.
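Actual denoising is usually done with dedicated signal-processing or ML tools. A simpler cleaning step you can sketch yourself is screening out clips that are too noisy to be useful. The heuristic below is our own assumption rather than a library method: it estimates signal-to-noise ratio by comparing the loudest and quietest 10% of frames, so it expects clips that contain some pauses, and the 15 dB cut-off is a hypothetical value.

```python
import math
from typing import List, Sequence


def frame_powers(samples: Sequence[float], frame_len: int) -> List[float]:
    """Mean power of consecutive non-overlapping frames."""
    powers = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        powers.append(sum(x * x for x in frame) / len(frame))
    return powers


def estimate_snr_db(samples: Sequence[float], frame_len: int = 320) -> float:
    """Rough SNR in dB: loudest 10% of frames vs quietest 10% of frames.

    A screening heuristic, not a calibrated measurement. 320 samples is
    20 ms at 16 kHz.
    """
    powers = sorted(frame_powers(samples, frame_len))
    if not powers:
        raise ValueError("empty recording")
    n = max(1, len(powers) // 10)
    noise = sum(powers[:n]) / n     # quietest frames ~ background noise floor
    speech = sum(powers[-n:]) / n   # loudest frames ~ speech
    if speech == 0:
        return float("-inf")        # pure digital silence
    if noise == 0:
        return float("inf")         # speech over a perfectly silent background
    return 10 * math.log10(speech / noise)


def keep_for_training(samples: Sequence[float], min_snr_db: float = 15.0) -> bool:
    # min_snr_db is a hypothetical cut-off; tune it on your own data.
    return estimate_snr_db(samples) >= min_snr_db
```

Since the training set should still include realistic background noise, you may prefer to down-weight low-SNR clips rather than discard them all.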

Tips from Uptech: Throughout the entire data preparation, be mindful of data security and ethical considerations.

- Ensure the audio data is diverse enough to fairly represent your user’s demographics.

- Secure data collected and prepared to train AI models.

- Comply with relevant data privacy regulations such as HIPAA and GDPR, especially if your business operates in highly regulated industries like finance or healthcare.

Step 5: Train your AI assistant

The next phase in creating a personal AI assistant is developing its speech recognition capabilities. Typically, you’ll need to put the AI model through these processes.

Model training

Train your AI assistant so it can learn to understand the speech and business context it’s meant to serve. This involves feeding the AI model with volumes of training samples you’ve prepared.

During training, the speech recognition model analyzes and learns linguistic patterns, which it can recall later. Once trained, the model lets the assistant understand users and converse with them naturally, in language and tone that humans understand.

The problem with training a model is the immense computing resources required. You’ll need to invest in specialized AI hardware, such as GPUs or TPUs, to train and deploy the AI models. On top of that, it might take weeks or months to train a model adequately.

Fine tuning

At Uptech, we don’t recommend that every business train an entire speech recognition model from scratch. This process is costly and takes significant time that SMB owners can’t afford. In most cases, you can benefit from fine-tuning pre-trained models from AI providers like OpenAI.

When you fine-tune a model, it learns new information while retaining its existing capabilities. For SMBs, fine-tuning is the better approach in terms of time and cost. For example, you can use Whisper, OpenAI’s multilingual speech recognition model pre-trained on about 680,000 hours of audio data.

Then, fine-tune the model with commands and information related to your business. This way, you can quickly get the AI assistant to the market without spending an astronomical amount of money on development.

Model evaluation

Can you confidently deploy an AI model you’ve trained? Rather than relying on guesswork, evaluate it. Compare the model’s results with industry benchmarks to ensure it is accurate, consistent, and responsive when handling user commands.
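For the speech recognition part, the standard accuracy metric is word error rate (WER): the substitutions, deletions, and insertions needed to turn the model’s transcript into the reference, divided by the number of reference words. A minimal sketch, assuming lower-casing and whitespace splitting are the only text normalization you need:

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference.

    Computed with a word-level Levenshtein (edit) distance.
    """
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j]: edit distance between the ref words processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)
```

For example, `word_error_rate("set a reminder", "set reminder")` counts one deletion over three reference words, about 0.33. Real evaluations usually also normalize punctuation and numbers before scoring.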

We have a comprehensive guide where we explain how to build AI software from scratch and dive deep into how to prepare the data, train it, and tune it. Check it out!

Step 6: Design the UI/UX

If you want your AI-powered voice assistant to meet user expectations, having an eloquent chatbot isn’t enough. Instead, you’ll need to pay attention to the UI/UX elements of your app so that it engages users throughout their interaction.

Remember, users want to solve their problems seamlessly. That means mapping out their journey from the moment they sign up for the app. Proper choice of colors, layouts, fonts, and visual elements helps to a certain extent. However, you should also focus on conversational flow.

When you create an AI voice assistant, you must anticipate the various scenarios users might encounter. For example, they might ask questions that the AI model cannot answer satisfactorily. In such cases, decide what the assistant should do, such as courteously presenting other options, so that users can still solve their problem even when the AI can’t.

To incorporate conversational design, follow these tips:

- Identify the common queries that users ask and map the conversation for them.

- Avoid jargon or overly technical language. Instead, use common words that laypersons understand.

- Ensure that the voice assistant responds naturally instead of adopting a rigid or robotic style.

Here’s our expert article on how to build a conversational AI. Check it out for more insights!

Step 7: Develop or integrate

As your AI experts train the voice assistant, your development team can simultaneously work on the user-facing app. Usually, this involves creating an independent AI voice assistant app or integrating the capability into an existing one.

Develop an independent AI app

If you create an AI assistant app from scratch, you’ll need to go through the entire software development lifecycle. And that includes discovery, planning, resource allocation, development, and testing. You need to consider not only the AI features but also the app's business logic.

But if you already have some software that can potentially cover the task, you might consider an easier solution.

Integrate with an existing system

Instead of building a new app, you can integrate the voice assistant into an existing one. For example, you already have an eCommerce app that users love, but you want to add a voice assistant that helps them shop better. To do that, you can train the AI voice assistant and use APIs to integrate it with the existing app.

Of course, you need to ensure your current app can support AI integration. It might need some updates, refactoring, or even a complete rebuild. And sometimes, rebuilding an existing app is more difficult than creating a new one from scratch.

We help our partners find the most efficient approach to building the app or preparing it for the integration. That’s why many business owners outsource AI development to a reliable partner like Uptech.

Step 8: Test and debug

Don’t deploy your app until you’ve satisfactorily tested it for bugs, security, and other issues. Deep learning models and speech recognition technologies are still improving and may occasionally produce unhelpful responses. Besides, users might be concerned about data security and privacy when you introduce a new feature, especially AI.

So, make app testing a priority and not an afterthought. At Uptech, we continuously test the AI engine and app throughout the development. To ensure the final product is stable, accurate, and consistent, our QA engineers run several types of tests, including:

- Unit tests, which ensure all individual software modules are developed according to specifications.

- Integration tests check for compatibility issues when multiple services are combined together.

- Security tests, where we perform static and dynamic analysis to uncover vulnerabilities in the code, libraries, and modules the app uses.

- System tests provide a holistic view of the app.

Verification Order and Target Metrics

When testing a custom voice assistant, it helps to follow a clear, step-by-step order. This way, you can make sure each part works well before moving on to the next step.

- ASR quality and latency

- NLU accuracy

- Integrations with APIs and services

- Load and performance under expected usage
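For the latency checks, it helps to record per-request timings and look at percentiles rather than only the average. A minimal sketch using Python’s `time.perf_counter`; the helper names, the nearest-rank percentile method, and the choice of p50/p95 are our assumptions, and your target ranges will depend on your product.

```python
import math
import time
from typing import Any, Callable, Dict, Sequence, Tuple


def timed(fn: Callable[..., Any], *args: Any) -> Tuple[Any, float]:
    """Call fn(*args) and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, (time.perf_counter() - start) * 1000.0


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not values:
        raise ValueError("no measurements")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def latency_report(latencies_ms: Sequence[float]) -> Dict[str, float]:
    # Track the tail (p95, max) alongside the median: users notice the
    # occasional long pause even when typical responses are fast.
    return {
        "p50": percentile(latencies_ms, 50),
        "p95": percentile(latencies_ms, 95),
        "max": max(latencies_ms),
    }
```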

Key metrics to track (using typical/target ranges rather than hard numbers) include:

Step 9: Deploy and support

Once you’re satisfied with the QA reports, prepare to deploy the app. Depending on the type you’ve built, you’ll need to publish it on the App Store or Google Play. Your developers will also need to update the database and backend services hosted in your cloud infrastructure.

Now, don’t rest on your laurels after launching the app. Be prepared for any incidents, such as latent bugs or usability issues, that might arise in the subsequent days. Get your support team on standby so they can immediately respond to user requests.

Step 10: Make improvements

As with any product, you must monitor user feedback, trends, and technological shifts so you can improve the AI assistant accordingly. For example, users may want to perform more tasks with voice commands, which requires building or revising certain app functions.

While you continuously optimize the app to remain relevant, pay attention to data security, compliance, and other ethical concerns.

What Are the Principles of Voice Conversation Design?

Designing natural voice interactions is just as important as the AI technology itself. A well-designed conversation makes your assistant easier to use and more dependable. Here are some key guidelines to remember:

- Short TTS: Keep text-to-speech responses brief (1–2 sentences). Break longer instructions into smaller steps.

- Repeats/Clarifications: Ask users to repeat unclear input. Example: “I didn’t catch the city. Please repeat.”

- Confirmations: Use implicit or explicit confirmations. Example: “Move it to Friday at 10:00 — correct?”

- Session Memory: Give a quick summary before taking action to maintain context.

- No Screen Considerations: When users can’t see a display, make the options easy to follow and clearly say things like ‘repeat,’ ‘help,’ or ‘agent.’

- Multilingual and Accents: Support different languages or voices, and make sure the assistant handles various accents and the specific words your domain uses.
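The repeat, confirmation, and escalation guidelines can be captured in a small dialog policy. This sketch handles one slot-filling turn; the confidence cut-off, retry limit, function name, and reply wording are hypothetical and would come from your own conversation design.

```python
from dataclasses import dataclass
from typing import Optional

LOW_CONFIDENCE = 0.5   # hypothetical ASR/NLU confidence cut-off
MAX_REPROMPTS = 2      # hypothetical: after this many retries, offer a human agent


@dataclass
class SlotState:
    reprompts: int = 0


def next_reply(state: SlotState, slot: str, value: Optional[str],
               confidence: float, confirm_explicitly: bool) -> str:
    """Choose a short spoken reply for one slot-filling turn."""
    if value is None or confidence < LOW_CONFIDENCE:
        state.reprompts += 1
        if state.reprompts > MAX_REPROMPTS:
            # Escalate instead of looping forever.
            return "Sorry, I'm still not getting it. Say 'agent' to talk to a person."
        return f"I didn't catch the {slot}. Please repeat."
    state.reprompts = 0
    if confirm_explicitly:
        # Explicit confirmation for actions that are costly to undo.
        return f"{value}, correct?"
    # Implicit confirmation: echo the value and move on.
    return f"Okay, {value}."
```

Each reply stays within one or two short sentences, in line with the short-TTS guideline.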

Here’s a quick table with tips on what to do and what to avoid when designing AI voice bot interactions.

What’s Different About Enterprise Voice & IVR

Making a top-quality AI personal assistant or voice agent takes more than just being able to understand and talk. Big companies also need it to be reliable, follow rules, and work with the tools they already use. Here are the key things to pay attention to:

- Integrations: Enterprise assistants need to connect with the main tools organizations already use. This includes CRMs (e.g., HubSpot, Salesforce), ERPs, billing systems, helpdesk platforms (e.g., Zendesk, Freshdesk), and phone systems (e.g., SIP/WebRTC, IVR gateways). Always include a DTMF backup (keypad tones) for situations the system can’t handle automatically.

- Event Bus: Use webhooks or message queues so different parts of the system can talk to each other. Add safeguards like idempotency keys and deduplication so actions don’t get triggered twice by mistake.

- Deployment: Many BFSI (banking, financial services, and insurance) and healthcare organizations require on-premises or self-hosted setups. Make sure you have solid key management and logging controls in place.

- Operations: Be ready for enterprise-level operations, such as meeting service-level objectives and agreements (SLOs/SLAs) and planning for system capacity. You should also track per-minute costs for speech recognition, text-to-speech, and telephony usage.

Who really needs enterprise mode:

- Businesses that must follow strict rules or keep data in certain locations

- Companies with lots of calls or big contact centers

- Teams that depend on complicated CRM, ERP, or phone system integrations

- Regulated industries like banking, finance, and healthcare

Develop a Custom Solution or Use an AI Assistant Builder?

If you want to create an AI voice assistant, you have three choices: a full custom solution, a hybrid approach, or a builder-based MVP. Each has advantages and risks, so it helps to understand all three before making a decision.

Full Custom Solution

A fully custom personal assistant is built around your business needs. You control every feature, process, and connection to other tools.

Good for: Big companies or projects that need a lot of customization and complete control over data and privacy. Firms with complex system connections can also benefit from it.

Risks: Expensive, takes longer to launch, and needs strong technical skills.

Hybrid Approach

This option combines ready-made AI assistant tools with custom microservices for special features.

Good for: Small or mid-sized teams that want to build faster but still add some custom features.

Risks: A bit more complicated than using just ready-made tools and needs some development skills, either in-house or outsourced.

Builder-Based MVP

A builder-based MVP uses drag-and-drop platforms to create a prototype quickly without much coding.

Good for: Testing ideas fast and checking if people like them. Projects with a small budget.

Risks: It’s hard to scale or customize. Also, you’re limited to what the platform allows.

Check out this table to see the pros and cons of each approach, so you can choose the best way to build your AI personal assistant.

What ready-made solutions can you use?

If you want to get an AI voice assistant quickly and for free, try VoiceFlow and SiteSpeakAI. They are popular voice chatbot builders that many SMBs use. VoiceFlow lets you train a voice assistant on business knowledge bases and deploy it in channels like WhatsApp, Discord, and Slack. Meanwhile, SiteSpeakAI is a builder for creating an AI-powered support agent that can converse verbally with users.

Check out how both AI chatbot builders compare below.

What Features Should You Include in Your Voice Assistants?

These features are essential to create a purposeful AI voice assistant.

- Automatic speech recognition. It allows the app to accurately interpret spoken words and transcribe them to textual form.

- Natural language understanding enables the AI assistant to understand contextual relationships and converse naturally in various scenarios.

- Task automation links specific commands to actions that users want to accomplish.

- Personalization lets every user customize their preferences, including style, commands, and responses.

- Information sources. The voice assistant can retrieve information to provide real-time updates or relevant domain-specific data.

Why Creating An AI Voice Assistant Is Worth It

No doubt, you’ll pay more if you create your own AI voice assistant. However, the benefits of doing so can make it a worthwhile investment for SMBs. We share some of the common perks if you choose to build one.

1. Personalization

You can personalize how the AI assistant interacts with app users. Because you’re not constrained by a third-party provider’s limitations, you can train the machine learning model to learn the user’s preference and converse in styles they’re comfortable with.

For example, if you’re integrating a voice assistant into a medical app, it’s better to build one that takes the patient’s sensitivity into consideration.

2. Increased efficiency

An AI voice assistant helps you automate mundane tasks more effortlessly. Let’s say you want to set a reminder. Instead of opening an app and jotting it down, you can command the voice assistant to do so without disrupting your workflow.

3. Data privacy

If you use an AI builder, you’re relinquishing control of data management to a third party. This, unfortunately, might breach compliance regulations in certain industries. Conversely, building your own AI voice assistant puts you in control of how the data are collected, stored, and secured.

4. Creativity

If your business is driven by creativity, building your own AI voice assistant will fuel your team further. Without being boxed in by third-party constraints, they can freely ideate, experiment, and challenge existing conventions. Often, such freedom leads to breakthroughs and new products.

5. Scalability

A custom-built AI voice assistant is more scalable than an out-of-the-box chatbot from a third party. You can scale your own assistant to meet growing user demand, whereas you cannot freely expand, update, or customize a third-party voice assistant.

6. Innovation

You can be an early adopter of emerging technologies when you build your own AI voice assistant. Rather than waiting for updates from the chatbot builder, your developers can freely innovate to provide a competitive edge.

7. Integration

Your own voice assistant isn’t limited to the integrations a third-party platform supports. You can introduce voice chat to other products you use as part of your strategic plan.

For example, if your AI voice chatbot proves successful in customer support, you can introduce it for work scheduling, inventory management, and other operational tasks.

How Much Does It Cost to Develop an AI Assistant App?

The cost of building a custom voice assistant depends on different factors. One of them is how complex you want the tool to be. For instance, an MVP with FAQ capabilities, a few actions, and a single channel typically ranges from $40-80k. A pilot version with IVR and CRM integration, as well as multilingual support and streaming features, usually costs between $100k and $200k.

Meanwhile, enterprise-grade solutions with on-premises deployment, complex integrations, and reporting often start at $250k and up.

Where your team is based changes the price, too. Eastern Europe offers a good mix of quality and price. Teams in Asia tend to be cheaper, so they're ideal for MVPs or pilot projects. U.S. teams cost more but are great for big, enterprise-level solutions.

The cost drivers don’t end there. Here are other things you need to consider when you create an AI voice bot:

- Channels supported (web, app, telephony): More channels mean more development, testing, and maintenance, so the price will naturally rise.

- Traffic volume: Many users interacting at once need extra processing power and bandwidth, which adds to the cost.

- ASR/TTS usage minutes: The more speech recognition and text-to-speech the system uses, the higher the API or server bills.

- LLM calls: Each call to a large language model increases computing and subscription fees.

- Security requirements (on-prem certifications): Stricter security or compliance rules, like GDPR or HIPAA, may require extra systems and audits. They may also need encryption. Because of this, costs are likely to go up.

- System integrations: Connecting to other systems like CRM, ERP, or payment platforms needs custom work. It also requires ongoing maintenance. So, the project becomes more complex and expensive.
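A back-of-the-envelope estimate for the usage-based drivers (ASR/TTS minutes, telephony, and LLM calls) is easy to script. The unit prices are placeholders, not real vendor rates, the names are hypothetical, and the model assumes ASR and telephony run for the whole call while TTS runs only while the assistant is speaking.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class UsageRates:
    # Placeholder unit prices; replace with your vendors' actual rates.
    asr_per_min: float
    tts_per_min: float
    telephony_per_min: float
    llm_per_call: float


def monthly_usage_cost(calls: int, avg_minutes: float, llm_calls_per_conversation: int,
                       speaking_share: float, rates: UsageRates) -> Dict[str, float]:
    """Rough monthly variable cost of a voice channel.

    Excludes development, hosting, and maintenance. speaking_share is the
    fraction of each call during which the assistant is talking (TTS).
    """
    minutes = calls * avg_minutes
    cost = {
        "asr": minutes * rates.asr_per_min,
        "tts": minutes * speaking_share * rates.tts_per_min,
        "telephony": minutes * rates.telephony_per_min,
        "llm": calls * llm_calls_per_conversation * rates.llm_per_call,
    }
    cost["total"] = sum(cost.values())
    return {name: round(value, 2) for name, value in cost.items()}
```

For example, `monthly_usage_cost(1000, 3.0, 5, 0.5, UsageRates(0.01, 0.02, 0.01, 0.002))["total"]` returns `100.0` at these placeholder rates; plugging in real quotes shows quickly which driver dominates at your traffic volume.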

To reduce costs, you can cache responses and text-to-speech output. You can also use partial (interim) speech recognition results to start processing before the user finishes speaking, which makes your AI voice respond faster. Or, you can train the AI on your specific vocabulary and reuse components across features or projects.
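Caching TTS output pays off for phrases the assistant repeats, such as greetings and status messages. Here’s a minimal LRU cache sketch; `synthesize` stands in for whatever TTS call you use (a hypothetical `(text, voice) -> bytes` function), and the key collapses whitespace but keeps the text’s case, since some engines pronounce acronyms differently from lowercase words.

```python
import hashlib
from collections import OrderedDict
from typing import Callable


class TTSCache:
    """LRU cache for synthesized audio, keyed by voice + whitespace-normalized text."""

    def __init__(self, synthesize: Callable[[str, str], bytes], max_items: int = 1000):
        self._synthesize = synthesize  # your TTS call: (text, voice) -> audio bytes
        self._items: "OrderedDict[str, bytes]" = OrderedDict()
        self._max = max_items
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(text: str, voice: str) -> str:
        normalized = " ".join(text.split())  # collapse whitespace, keep case
        return hashlib.sha256(f"{voice}|{normalized}".encode("utf-8")).hexdigest()

    def get(self, text: str, voice: str) -> bytes:
        key = self._key(text, voice)
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._items[key]
        self.misses += 1
        audio = self._synthesize(text, voice)
        self._items[key] = audio
        if len(self._items) > self._max:
            self._items.popitem(last=False)  # evict the least recently used entry
        return audio
```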

Uptech Tips for AI Voice Assistant Development

Over the years, we’ve helped business owners worldwide develop or integrate AI assistants with their products. Consider these tips to avoid potential complications and accelerate your development.

Implement sentiment analysis and natural language understanding

Both technologies are the core of a successful AI-based voice assistant. Sentiment analysis allows the app to interpret the underlying sentiment when a user expresses a verbal command. Meanwhile, natural language understanding is crucial to comprehend the contextual relationship of continuous conversations.

Utilize APIs for voice and command recognition

Without voice recognition, your AI assistant can only understand text-based commands. So, you’ll need to integrate a speech-to-text engine into your app. To do that, we recommend using APIs. This way, you don’t need excessive coding or to build the entire functionality from scratch. Instead, you can leverage pre-built functionality from third-party speech recognition engines to save time and resources.

Anonymize user data and regularly conduct security audits

Don’t neglect data security when you’re creating the AI assistant. Because it involves storing and processing large volumes of data, there is a heightened risk of data leakage and cyber threats. Continuously assess your data security posture and apply protective measures such as encryption, anonymization, and application security tests.

Prioritize Security and Governance

Keeping data secure is crucial when you create an AI voice assistant. Protecting user info helps people trust your app and keeps you compliant. Here are some practical ways to safeguard your app and its data:

- PII redaction: Remove personal info from logs and recordings. Then, encrypt data and use role-based access control (RBAC). Also, maintain an audit trail.

- Dataset anonymization: Ensure any data used to fine-tune AI models doesn’t reveal personal info.

- Voice biometrics and liveness checks (when appropriate): Use them to prevent spoofing.

- Consent and retention: Ask users for permission before recording, and explain how long the data will be kept. Also give them a simple way to decline or opt out.

- Regulatory compliance mapping: Follow rules like PCI, GDPR, and HIPAA with practical steps, not legal jargon.
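For the PII redaction step, a rule-based pass over transcripts before they’re logged is a common starting point. The patterns below are deliberately simple assumptions: they catch typical emails, card numbers, and phone numbers, but will miss names and addresses and may over-match other long numbers such as dates. Production systems often add a named-entity recognition model on top.

```python
import re

# Order matters: emails first (they may contain digits), then card numbers,
# then the broader phone pattern.
_PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),   # 13 to 16 digits
    ("PHONE", re.compile(r"\+?\d[\d ()-]{7,}\d")),
]


def redact(text: str) -> str:
    """Replace each match with a typed placeholder such as [EMAIL]."""
    for label, pattern in _PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text
```

Keeping a typed placeholder rather than deleting the match preserves enough context to debug conversations without storing the personal data itself.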

At Uptech, we help businesses of all sizes build and improve AI voice assistants, whether it’s a simple prototype or a full-scale enterprise solution. Our team brings deep expertise in AI, system integration, and security, with a track record of delivering reliable, scalable voice assistants. We’ve successfully completed hundreds of projects, including generative AI-powered apps such as Tired Banker, Angler AI, and Dyvo AI.

If you’re looking to create or upgrade a custom AI personal assistant for your business, get in touch with our team, and we'll help you bring it to life.

FAQ

How do voice assistants work?

They listen to users’ verbal commands, transcribe them into text, and feed the text to a language model, such as the ones behind ChatGPT, which is typically fine-tuned on or grounded in domain-specific data. After understanding the context, intent, and command, the voice assistant automates the designated tasks and provides a response.

Can an AI Assistant be integrated with existing software systems?

It depends on whether your existing software can meet the API and other technical requirements of the AI assistant. If the software is built on a legacy framework, it likely needs a major revamp or a total rebuild. Otherwise, you can integrate an AI assistant with your app through APIs.

How long does it take to build an AI Assistant?

If you build a simple AI assistant capable of answering basic questions, it’ll take 2-3 months. But expect a longer development time if your app requires complex NLP, voice recognition, and system integrations. Some AI assistants that offer multilingual support and extensive customization might take more than 12 months to build.
